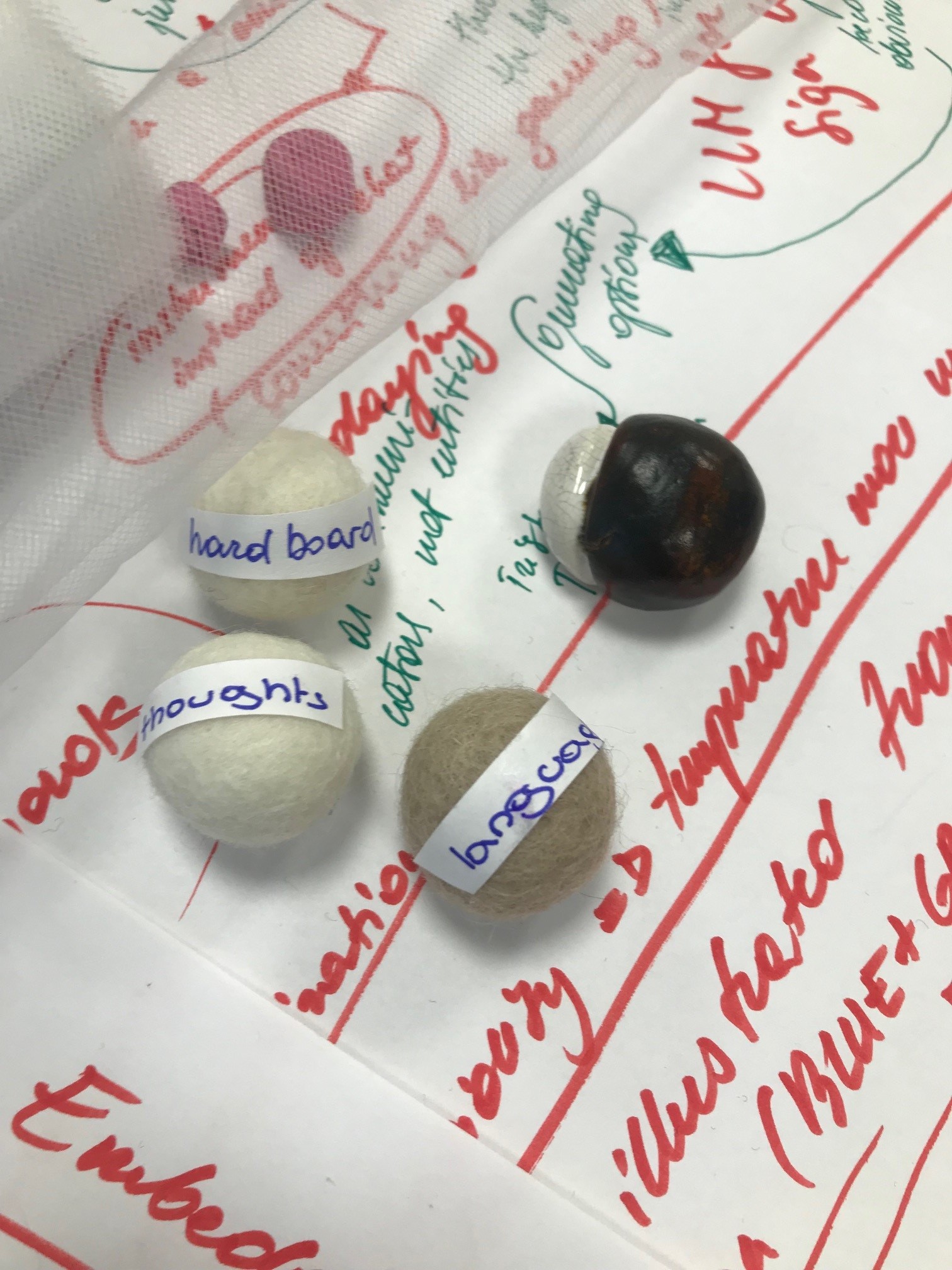

Tangible and embodied interactions – can they offer something for the way users understand and engage with large language models (LLMs)? This is the question we explored in a studio (a.k.a. a hands-on workshop) titled “Tangible LLMs: Tangible Sense-Making for Trustworthy Large Language Models” that PIT, namely […]

Feeling AI: ways of knowing beyond the rationalist perspective

Felt AI is a collaborative project between myself (Goda Klumbytė), design researcher Daniela K. Rosner and artist Mika Satomi. Over the last year and a half, the three of us have been meeting regularly to probe, discuss and research together how one can feel AI. […]

Algorithms + Slimes: Learning with Slime Molds

In summer 2024, Goda Klumbytė and artist Ren Loren Britton explored algorithms and slimes in a joint residency at RUPERT, Centre for Art, Residencies, and Education in Vilnius, Lithuania. These are some brief notes on what we learned and how we sought non-extractive ways of […]

After Explainability: Directions for Rethinking Current Debates on Ethics, Explainability, and Transparency

The EU guidelines on trustworthy AI posit that one of the key aspects of creating AI systems is accountability, the routes to which lead through, among other things, the explainability and transparency of AI systems. While working on the AI Forensics project, which positions accountability as a matter […]

Doing Diversity in Computing

Based on its commitment to diversity, equity and inclusion in computing, the Association for Computing Machinery has put forward a list of considerations for forming diverse teams. This list includes inherent characteristics such as race and ethnicity, gender identity and disability, as well as acquired […]

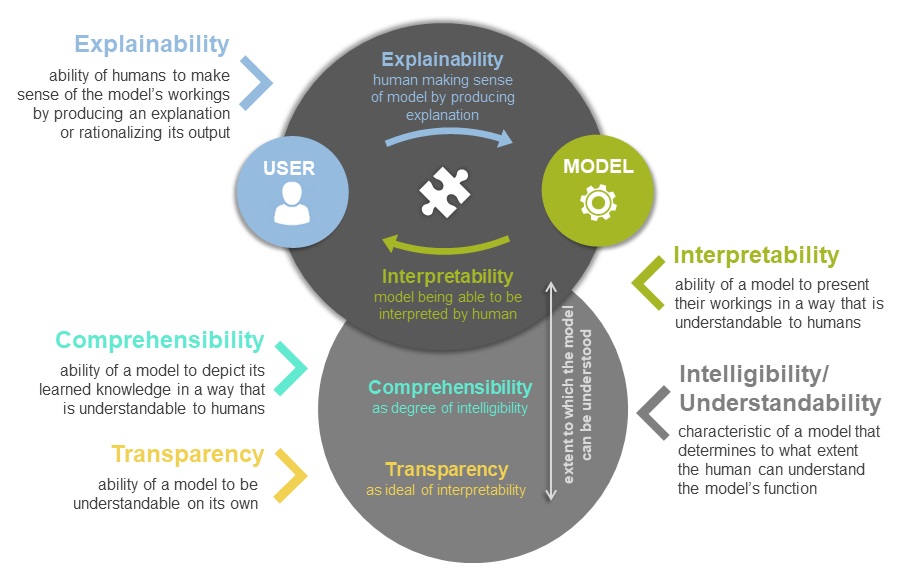

Messy Concepts: How to Navigate the Field of XAI?

Entering the field of explainable artificial intelligence (XAI) means encountering the many terms the field is built on. Numerous concepts come up in articles, talks and conferences, and it is crucial for researchers to familiarize themselves with them. To name a few, there are explainability, interpretability, understandability, […]

Impressions from “Shaping AI”: Controversies and Closure in Media, Policy, and Research

On January 29 and 30, 2024, a small but international group of scholars, civil society representatives, and practitioners came together at the Berlin Social Science Center to discuss the controversies, imaginaries, developments, and policy solutions surrounding AI under the name “Shifting AI Controversies – Prompts, […]

Gendered Images of Computer Science

When thinking of computer science – what images pop into one’s head, and what can those images tell us about the discipline as well as about interconnected societal expectations and norms? In this short post we report on our research into gendered codings of computer science. […]

Thoughts from the PIT: Introducing Lea Stöter

I recently attended my first academic conference – the closing event of a project titled “Shaping AI” – and on the train journey back, after two days of presentations, soaking up knowledge, attempts at networking, and questions-turning-monologues, I left with one question: “Can this […]

Bayesian Knowledge: Situated and Pluriversal Perspectives

November 9 & 10, 2023, 09:00–12:30 GMT / 10:00–13:30 CET / 04:00–07:30 ET / 20:00–23:30 AEDT
Hybrid workshop (online + at Goldsmiths, London, UK)

This workshop examines potential conceptual and practical correlations between Bayesian approaches in statistics, data science, mathematics and other fields, and feminist […]