The EU guidelines on trustworthy AI posit that one of the key aspects of creating AI systems is accountability, which can be reached through, among other things, the explainability and transparency of AI systems. While working on the AI Forensics project, which positions accountability as a matter of interpretability, our team started looking into explainable AI and its design, particularly the possibilities of designing explainable AI with diversity, inclusion and social justice in mind. According to Giulia Vilone and Luca Longo, recent literature shows that one of the principal reasons to produce explanations is to gain the trust of users and to produce causal explanations. According to the research they cite, causal attributions are essential to human psychology and behavior, which makes causal explanations essential to explainability. However, Vilone and Longo note, “Data-driven models are designed to discover and exploit associations in the data, but they cannot guarantee that there is a causal relationship in these associations.” Explainability thus becomes a kind of additional method for generating relational links, causal or not, between AI outputs and human understanding.

Apart from trust and causality, other reasons listed for explainability, according to Vilone and Longo, are:

- “explain to justify - the decisions made by utilising an underlying model should be explained in order to increase their justifiability;

- explain to control - explanations should enhance the transparency of a model and its functioning … ;

- explain to improve - explanations should help scholars improve the accuracy and efficiency of their models;

- explain to discover - explanations should support the extraction of novel knowledge”

Closely related to explainability is the discourse on ethics: the capacity for ethical interaction with AI often rests on the understandability of such systems, which in turn relies on these systems being transparent and/or interpretable enough to be explainable.

However, some issues emerge in defining explainability and transparency, as well as from an over-reliance on their connection to trust and ethics. First, their definitions and implementations often tend to be technical. Second, explanations might illuminate how the system generates inferences (for example, by demonstrating which variables contribute most to a decision), but they might not address the broader social, political and environmental effects of such systems. Third, explainability and transparency design is often geared towards engineers themselves or direct users of the systems (as opposed to broader audiences or those negatively affected). It relies heavily on natural language explanations and visualisations as the main modalities of communication, appealing to universal, disembodied reason as the main form of perception.

All this, we thought, calls for re-thinking explainability and its connection to transparency and ethics. To that end, we organised a two-day workshop, “After Explainability: Metaphors and Materialisations Beyond Transparency”, which took place in Kassel and online on 17-18 June 2024. The workshop specifically asked what kinds of re-orientations the humanities and social sciences can produce, especially in light of other epistemic traditions such as feminist, Black and post/de-colonial thought, new materialisms, critical posthumanism, and other critical theoretical perspectives.

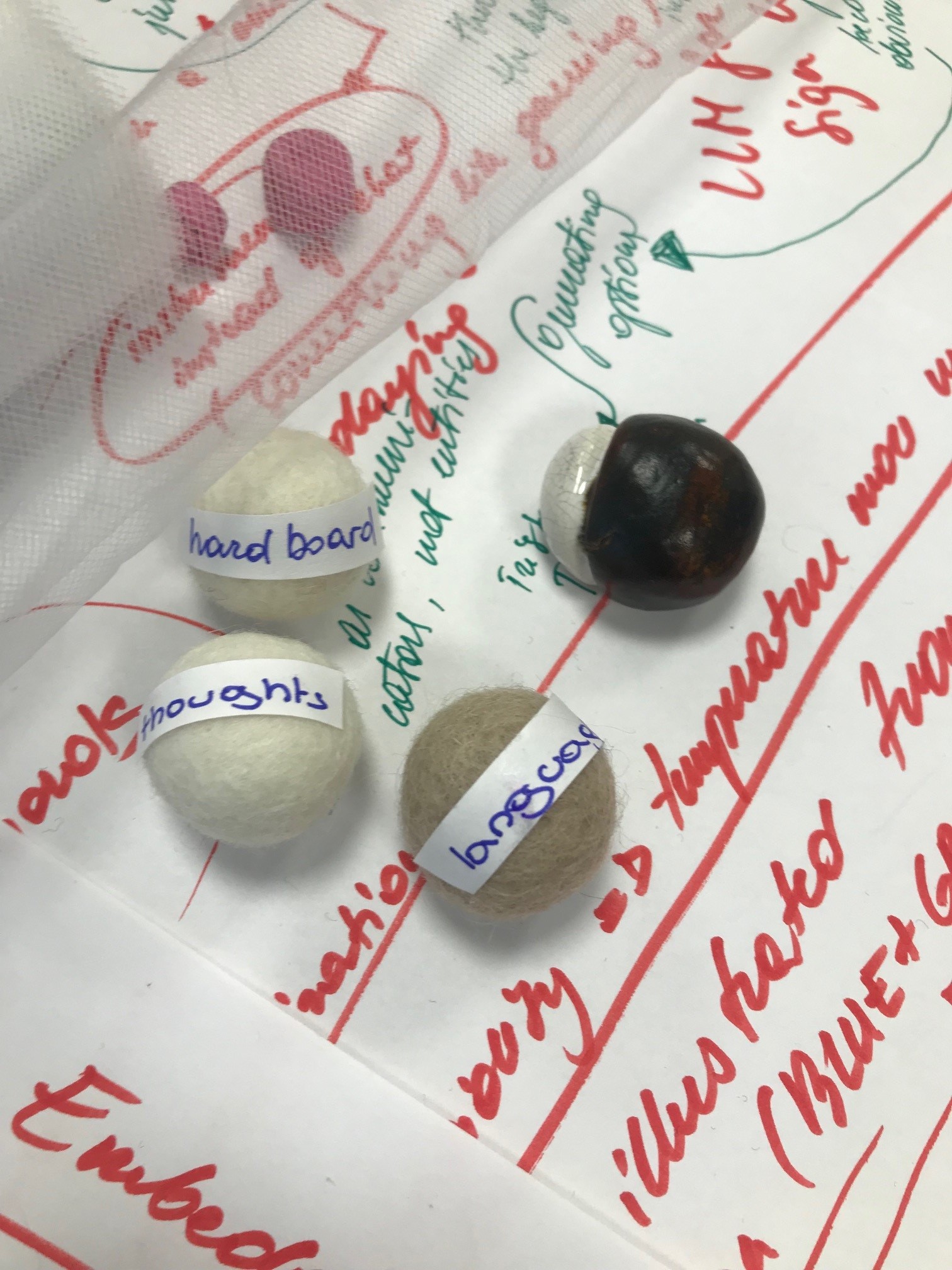

Over the two days, seven presentations each offered a term for re-thinking, diffracting, critiquing or otherwise intervening in discourses of explainability and transparency: post-critical AI literacy (Eugenia Stamboliev); scales and flows, especially the various translations required across the scales of the AI supply chain (Alex Taylor); accountability and its challenges in predictive AI in the humanitarian sector (Arif Kornweitz); felt ethics (Rachael Garrett); experiential heuristics (Goda Klumbytė and Dominik Schindler); the machinic imaginary, following the work of Castoriadis (Conrad Moriarty-Cole); and opacity, based on the work of Glissant (Nelly Y Pinkrah).

While many different questions emerged during the workshop, we would like to highlight a few themes that cut across nearly all presentations. The first is the question of trust in machine learning and intelligent systems. As research shows, trust, while desirable, is not always an unquestionable good. There are cases, for instance, where trust is questionable due to histories of structural oppression and persecution, particularly of marginalised subjects using technology (such as the surveillance of Blackness, as Simone Browne shows). In other cases, too much trust leads to an over-reliance on technological tools. Furthermore, (mis)trust in AI is also interrelated with (mis)trust in larger political, administrative, legislative and other institutions and their functioning. While the AI tool in question can be reliable and trustworthy, its uses and its “owners” might not be. As one of the workshop attendees put it, “I think the point is more about trusting the corporations/business/scientists/govs behind. That’s why AI ethics is important to legislate, more than legislating AI as a technology”.

Similarly, accountability is a concept that requires further nuance. In certain contexts, such as the humanitarian sector, as Arif Kornweitz pointed out, there might be neither sufficient procedures for accountability nor adequate definitions of harm (does harm only come from personally identifiable information, or also from demographically identifiable information?), while the actors in the field cannot be relied upon to take accountability of their own accord. As one of the commentators put it, “accountability is often about a holding to account of those with the power to design, build and implement AI systems (and infrastructure more generally). … We cannot expect an entity to take accountability if it is potentially against their own interest. In the case of AI systems, what the material interests are that drive the design and implementation is where we might see the breakdown of genuine accountability and obfuscation.”

This points to the larger context of AI, and specifically to the fact that when we talk about AI, we never talk only about AI: it is a matter of sociotechnical systems. This complicates what we mean by explanation and ethics. Who do we explain to? For what purpose? And what exactly merits explanation? As Alex Taylor noted, “capitalist production relies on work in uncertain conditions being made certain through technical tools … When explaining is done, it is not to explain the problems but to render them in such a way that they become technical solutions”. Explanation, in this sense, pushes the translation and reduction of sociotechnical problems into technically manageable ones. How we carry out these various translations, including how we define, frame and measure a problem, how we choose optimization criteria, and how we apply knowledges from different domains, matters greatly and ultimately shapes the ethical and explanatory landscapes that get instituted alongside a system.

As emerged from the general discussion, AI can be put in its sociotechnical context by reconceptualizing it as an infrastructure rather than a technology. The discussion thereby moves, as Arif Kornweitz stated, “from an ‘intelligence’ discussion to a ‘in-service-of’ discussion”, opening opportunities for explainability in community contexts. Continuing this train of thought, user participation in explainability serves not merely to operationalize explainability and assess understanding, but also as a way of ‘putting people around the table’ to design and decide how explainability is implemented. Regarding questions of power, Nelly Y Pinkrah added that it is equally important who decides who gets to sit at the respective table.

Some of those landscapes can be intervened in and reconfigured by using different starting points. For instance, Eugenia Stamboliev highlighted the need for a post-critical approach to literacy, understood as a capacity not only for critique (being against something) but also for finding ways towards generative creativity. Rachael Garrett pointed out that starting from a somaesthetic approach in design, one that prioritizes the body and embodiment, can help re-think ethics as a process that is also felt and embodied. Practicing ethics then becomes a matter of care that needs to be performed analytically, pragmatically and practically. Furthermore, as Goda Klumbytė and Dominik Schindler showed, even at the rather abstract level of computational and explanatory heuristics, situated knowledges and embodied transcorporeal experience can be important sources of more accountable AI/ML systems rooted in a critical, reflexive politics of location.

Another important point concerned the overall condition of the machinic and the social. This was discussed at a rather high level of philosophical abstraction (not unlike the high levels of abstraction in computation or mathematics). What do technologies such as intelligent machines do to the operation of the social and the social imaginary as such? One way to see them, as Conrad Moriarty-Cole did, is as completely alien forms of intelligence that inject their modes of operation into the social. The point here would be to look for forms of responsivity as a dialogical, expressive and open-ended act that does not depend on transparency or full intelligibility. Alternatively, as Nelly Y Pinkrah noted drawing on Édouard Glissant’s work, we can look for operations of opacity and investigate closely who and what is made opaque in the machinic realm, and how. These operations of opacity are ambivalent: in some cases they provide possibilities for liberation and resistance (as in Glissant’s famous “right to opacity”), while in others they obfuscate and conceal what should be made visible. Thus opacity and transparency, even in the machinic realm, have to be thought together as a dynamic in which critique becomes possible.

Forms of critique and creativity were also discussed during the two days of the workshop. Is creativity with critique possible? Or is the role of critique simply that: to point out the domains of power that need attention? What other forms of engagement with AI are there, beyond both critique and straightforward acceptance? As one participant pointed out, historian Saidiya Hartman addresses this beautifully with the concept of “critical fabulation”: “I intended both to tell an impossible story and to amplify the impossibility of its telling.” “Is it fair to say,” asked our participant, “that when confronted with the impossible then all we have left is stories? Can we imagine stories of other ways to live with AI? That aren’t so all encompassing and seek omnipresence?”

We hope that these themes will provide plenty of inspiration for further research and generative interventions into the more traditional debates and discourses around explainability and ethics in AI.

*The workshop “After Explainability: AI Metaphors and Materialisations Beyond Transparency” was co-hosted by the DFG-Network “Media, Gender, and Affect” and the Participatory IT Design department, as part of the “AI Forensics” project. The event was made possible with the support of the German Research Foundation (DFG) and the Volkswagen Foundation.*