As a design perspective, explainable artificial intelligence (XAI) can benefit from feminist perspectives. This post explores some dimensions of feminist approaches to explainability and human-centred explainable AI.

Author: Goda Klumbyte

critML: Critical Tools for Machine Learning that Bring Together Intersectional Feminist Scholarship and Systems Design

Critical Tools for Machine Learning, or CritML, is a project that brings together critical intersectional feminist theory and machine learning systems design. The goal of the project is to provide ways to work with critical theoretical concepts that are rooted in intersectional feminist, anti-racist, post/de-colonial […]

Call for Participation: Critical Tools for Machine Learning

Join the workshop “Critical Tools for Machine Learning”, held as part of the CHItaly conference on July 11, 2021.

Join us in reading Jackson’s “Becoming Human: Matter and Meaning in an Antiblack World”

In what ways have animality, humanity and race been co-constitutive of each other? How do our understandings of being and materiality normalize humanity as white, and where does that leave the humanity of people of color? How can alternative conceptualizations of being human be found […]

Epistemic justice # under (co)construction #

This is the final part of a blog post series reflecting on a workshop, held at the FAccT conference 2020 in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it as well as what is a […]

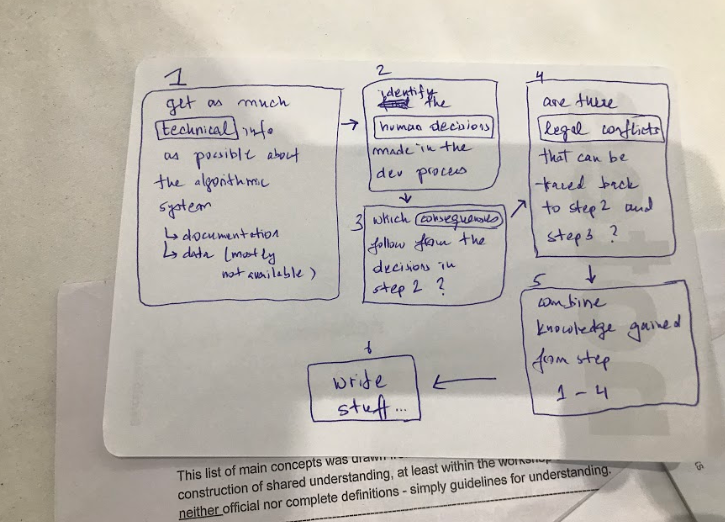

Experimenting with flows of work: how to create modes of working towards epistemic justice?

This is part three of a blog post series reflecting on a workshop, held at the FAccT conference 2020 in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it as well as what is a […]

Finding common ground: charting workflows

This is part two of a blog post series reflecting on a workshop, held at the FAccT conference 2020 in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it, read our first article here. This post […]

Reading group Spring session: Ruha Benjamin’s “Race After Technology”

Are robots racist? Is visibility a trap? Are technofixes viable? What is social justice in the high-tech world? These questions are raised in Ruha Benjamin’s book Race After Technology (2019, Polity Press), which we will read during our TBD reading group spring session 2020. Race […]

TBD research day on xenofeminism

Over the past months the TBD Reading Group, hosted by GeDIS and Sociology of Diversity at the University of Kassel, read Laboria Cuboniks’ “Xenofeminist Manifesto” (2015) and Helen Hester’s follow-up monograph “Xenofeminism” (2018). Xenofeminism links technomaterialist, anti-naturalist and gender abolitionist perspectives on processes of bodily […]

Lost in translation? Invitation to address the challenges of interdisciplinary cooperation in the FAT community

This post was originally published on Medium on 11 December 2019 and was written by Aviva de Groot, Danny Lämmerhirt, Evelyn Wan, Goda Klumbyte, Mara Paun, Phillip Lücking, and Shazade Jameson. Introduction: The short of it. The rapid deployment of complex computational, data-intense infrastructures profoundly […]