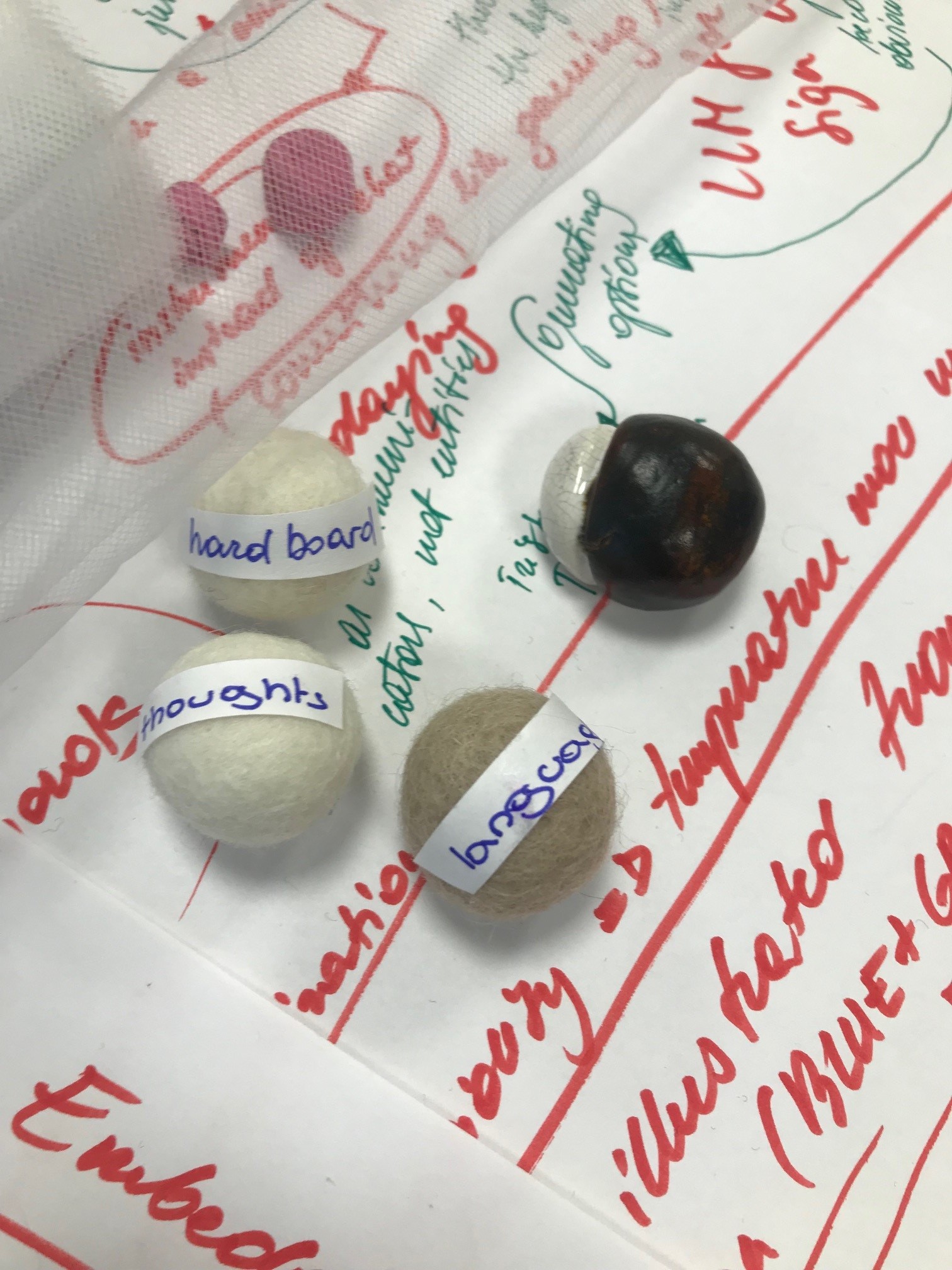

Can tangible and embodied interactions offer something for the way users understand and engage with large language models (LLMs)? This is the question we explored in a studio (a.k.a. hands-on workshop) titled “Tangible LLMs: Tangible Sense-Making for Trustworthy Large Language Models” that PIT, namely […]

Author: Goda Klumbytė

Feeling AI: ways of knowing beyond the rationalist perspective

Felt AI is a collaborative project between myself (Goda Klumbytė), design researcher Daniela K. Rosner and artist Mika Satomi. Over the last year and a half, the three of us have been meeting regularly to probe, discuss and research together how one can feel AI. […]

Algorithms + Slimes: Learning with Slime Molds

In summer 2024, Goda Klumbytė and artist Ren Loren Britton explored algorithms and slimes in a joint residency at RUPERT, Centre for Art, Residencies, and Education in Vilnius, Lithuania. These are some brief notes on what we learned and how we sought non-extractive ways of […]

After Explainability: Directions for Rethinking Current Debates on Ethics, Explainability, and Transparency

The EU guidelines on trustworthy AI posit that one of the key aspects of creating AI systems is accountability, the routes to which lead through, among other things, the explainability and transparency of AI systems. While working on the AI Forensics project, which positions accountability as a matter […]

Bayesian Knowledge: Situated and Pluriversal Perspectives

November 9 & 10, 2023, 09:00–12:30 GMT / 10:00–13:30 CET / 04:00–07:30 ET / 20:00–23:30 AEDT. Hybrid workshop (online + at Goldsmiths, London, UK). This workshop examines potential conceptual and practical correlations between Bayesian approaches, in statistics, data science, mathematics and other fields, and feminist […]

Feminist XAI: From centering “the human” to centering marginalized communities

Explainable artificial intelligence (XAI), understood as a design perspective, can benefit from feminist approaches. This post explores some dimensions of feminist approaches to explainability and to human-centred explainable AI.

critML: Critical Tools for Machine Learning that Bring Together Intersectional Feminist Scholarship and Systems Design

Critical Tools for Machine Learning, or CritML, is a project that brings together critical intersectional feminist theory and machine learning systems design. The goal of the project is to provide ways to work with critical theoretical concepts that are rooted in intersectional feminist, anti-racist, post/de-colonial […]

Call for Participation: Critical Tools for Machine Learning

Join the workshop “Critical Tools for Machine Learning”, held as part of the CHItaly conference on July 11, 2021.

Join us in reading Jackson’s “Becoming Human: Matter and Meaning in an Antiblack World”

In what ways have animality, humanity and race been co-constitutive of each other? How do our understandings of being and materiality normalize humanity as white, and where does that leave the humanity of people of color? How can alternative conceptualizations of being human be found […]

Epistemic justice # under (co)construction #

This is the final part of a blog post series reflecting on a workshop about machine learning and epistemic justice, held at the FAccT 2020 conference in Barcelona. If you are interested in the workshop concept and the theory behind it as well as what is a […]