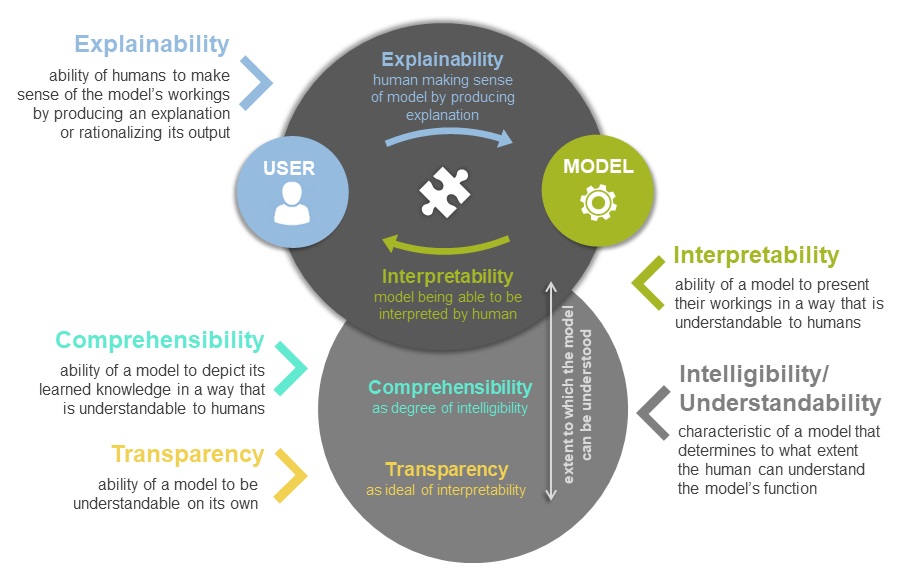

With artificial intelligence occupying more space in our daily lives and algorithms becoming increasingly complex, explainable artificial intelligence is gaining popularity, as it is inevitably linked to questions of how people can comprehend these systems. Explainable artificial intelligence (XAI) aims to enable human understanding of how algorithms work, especially of their output behavior[i]. In other words, XAI seeks to make it understandable how an algorithmic model has reached its decision. This can be done either by including explainability as an aim in the design process (ante-hoc explanations) or by explaining how the model works after the fact (post-hoc explanations). Working with XAI always entails asking whom an explanation is intended for, as designers, users and other stakeholders often have different levels of expertise and different motives[ii].

A couple of years ago, a new term was popularized in the field of XAI: human-centered explainable AI (HCXAI), which seeks to center the human by examining human-AI cooperation from the human’s viewpoint. Focusing on values and “the socially situated nature of AI” (Ehsan & Riedl 2020, p. 1)[iii], this field tries to account for the fairness, accountability and transparency of sociotechnical systems by moving the system’s output closer to human understanding[iv]. This raises the question of whether moving machine output closer to human rationales is the only way of dealing with different explanatory modalities, or whether other viewpoints, for example feminist epistemologies, could provide a different method for XAI.

Apart from very few contributions, feminist perspectives have not yet been broadly applied to XAI. However, a few papers provide a foundation on which to build an explicitly feminist epistemological position on XAI[v]. Drawing on feminist epistemologies, such as the concept of situated knowledges, standpoint theory and intersectionality, opens up a social justice-oriented way of approaching XAI. With these epistemological perspectives in mind, we present a number of feminist principles for XAI, which in turn offer concrete implications for XAI design. The research direction we introduce is still largely conceptual and has not yet been applied to any XAI system. There are multiple ways to expand, concretize and add to this framework, as well as opportunities for application and collaboration.

Feminist principles for XAI:

- Normative orientation towards social justice and equity by foregrounding XAI design that contributes to justice and equity, making XAI inextricably linked to fairness, accountability, transparency and ethics (FATE), and directing AI systems and explanatory efforts towards actively eroding inequalities.

- Attention to power and structural inequalities by researching, understanding and accounting for the ways that concepts pertaining to explainability and explanation design are entangled with power dynamics in specific sociotechnical contexts.

- Challenging universalist and traditional rationalist modalities of explanation by endorsing a plurality of perspectives and breaking with the idea that a culturally specific Western rationality is universal, in order to expand what is meant by explanation and the ways in which it can be delivered.

- Centering marginalized perspectives by encouraging participatory and inclusive processes in XAI design, fostering interdisciplinarity, and actively pursuing XAI that benefits and empowers marginalized stakeholders to critically interpret, evaluate and, importantly, respond to AI systems.

For XAI design to be intersectional feminist, it must therefore include…

… context-oriented inquiry of the goals, means and ends of XAI design.

… attention to the systemic level, in order to understand the context and broader rationale of the specific AI system.

… proactivity in pursuing intersectional perspectives as a goal.

… participatory and interactive explanation design to understand and account for marginalized perspectives and foster critical response-ability.

… intersectionality in the understanding of different stakeholders and user groups.

… an expansion of explanatory modalities by experimenting with ways of delivering explanations beyond the more traditional rational-cognitive ones.

For a more detailed discussion of the principles and implications above, as well as an introduction to cartography as a method for accounting for invisible labour in the AI ecosystem, see our CHI 2023 workshop papers on these topics:

Goda Klumbytė, Hannah Piehl, and Claude Draude. 2023. Towards Feminist Intersectional XAI: From Explainability to Response-Ability. Workshop: Human-Centered Explainable AI (HCXAI), Conference on Human Factors in Computing Systems CHI ’23, April 23–28, 2023, Hamburg, Germany, 9 pages.

Goda Klumbytė, Hannah Piehl, and Claude Draude. 2023. Explaining the ghosts: Feminist intersectional XAI and cartography as methods to account for invisible labour. Workshop: Behind the Scenes of Automation: Ghostly Care-Work, Maintenance, and Interference, Conference on Human Factors in Computing Systems CHI ’23, April 23–28, 2023, Hamburg, Germany, 6 pages.

[i] Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., Garcia, S., Gil-Lopez, S., Molina, D., Benjamins, R., Chatila, R., & Herrera, F. (2020). Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82–115. https://doi.org/10.1016/j.inffus.2019.12.012

[ii] Liao, Q. V., & Varshney, K. R. (2021). Human-Centered Explainable AI (XAI): From Algorithms to User Experiences. https://arxiv.org/pdf/2110.10790

[iii] Ehsan, U., & Riedl, M. O. (2020). Human-Centered Explainable AI: Towards a Reflective Sociotechnical Approach. In C. Stephanidis, M. Kurosu, H. Degen, & L. Reinerman-Jones (Eds.), HCI International 2020 – Late Breaking Papers: Multimodality and Intelligence (pp. 449–466). Springer, Cham. https://doi.org/10.1007/978-3-030-60117-1_33

[iv] Ehsan, U., Wintersberger, P., Liao, Q. V., Watkins, E. A., Manger, C., Daumé III, H., Riener, A., & Riedl, M. O. (2022). Human-Centered Explainable AI (HCXAI): Beyond Opening the Black-Box of AI. In S. Barbosa (Ed.), ACM Digital Library, CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1–7). Association for Computing Machinery. https://doi.org/10.1145/3491101.3503727

[v] Hancox-Li, L., & Kumar, I. E. (2021). Epistemic values in feature importance methods: Lessons from feminist epistemology. In Association for Computing Machinery (Ed.), ACM Digital Library, Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (pp. 817–826). Association for Computing Machinery. https://doi.org/10.1145/3442188.3445943

Huang, L. T., Chen, H., Lin, Y., Huang, T., & Hung, T. (2022). Ameliorating Algorithmic Bias, or Why Explainable AI Needs Feminist Philosophy. Feminist Philosophy Quarterly, 8(3). https://ojs.lib.uwo.ca/index.php/fpq/article/view/14347

Kopecká, H. (2021). Explainable Artificial Intelligence: Human-centered Perspective. WomENcourage, 22–24 September 2021, Prague.

Singh, D., Slupczynski, M., Pillai, A. G., & Pandian, V. P. S. (2022). Grounding Explainability Within the Context of Global South in XAI. CHI 2022 Workshop on Human-Centered Explainable AI (HCXAI). https://arxiv.org/pdf/2205.06919