Tangible and embodied interactions: can they offer something for the way users understand and engage with large language models (LLMs)? This is the question we explored in the studio (a hands-on workshop format) titled “Tangible LLMs: Tangible Sense-Making for Trustworthy Large Language Models”, which PIT, namely Goda Klumbytė and Claude Draude, co-organised with Maxime Daniel and Nadine Couture (ESTIA Institute of Technology) and Leonardo Angelini, Elena Mugellini and Beat Wolf (University of Applied Sciences and Arts Western Switzerland). The studio took place during the ACM International Conference on Tangible, Embedded, and Embodied Interaction (TEI) in Bordeaux, France, on 4-7 March 2025.

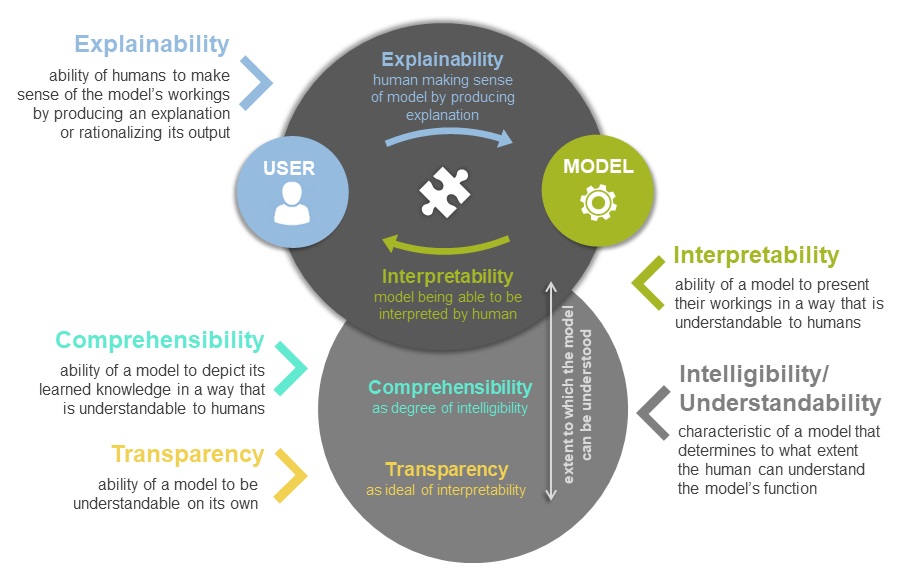

The goal of the studio was to explore how tangible and embodied interaction modalities can help people better grasp and make sense of how transformer-based AI models function (see the full studio paper for a more detailed description). We started the workshop with a basic introduction to what LLMs are and how they work (expertly delivered by Elena and Beat), covering core concepts such as input/output embeddings, positional encoding, latent space, attention and temperature. Using one card set for LLM core concepts and functions and another for tangible and embodied interaction modalities, we then brainstormed in groups which modalities could help explain specific concepts or functions of LLMs. Finally, the groups built paper prototypes based on these ideas, each addressing different dimensions of understandability and/or meaningful and trustworthy interaction.

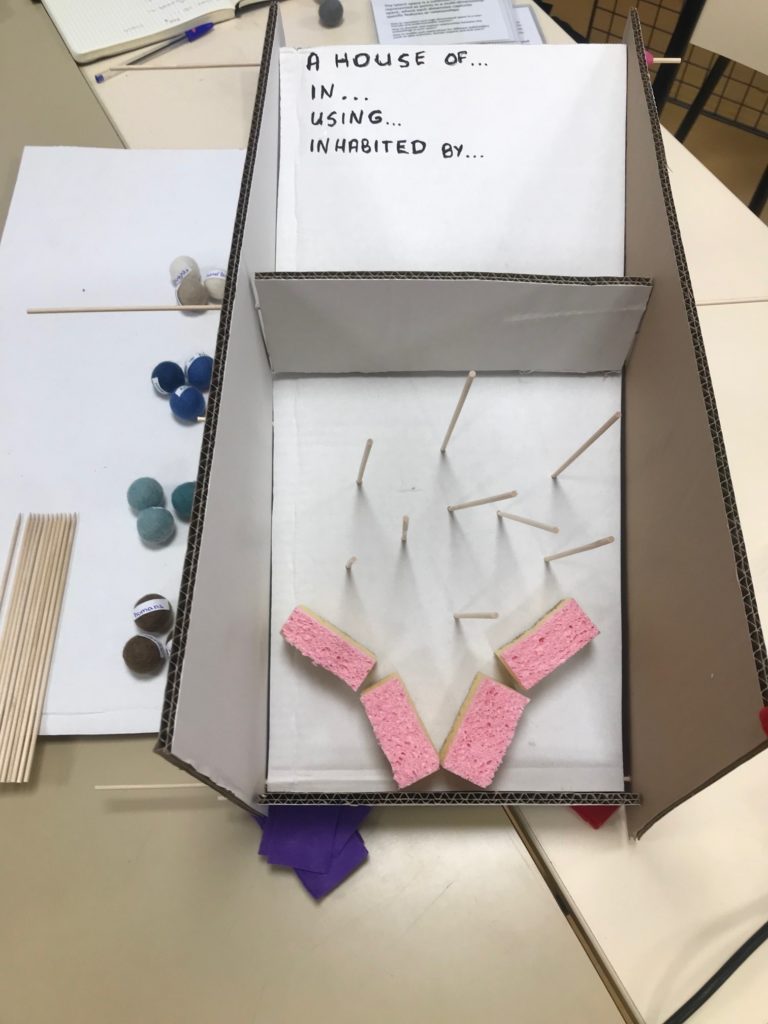

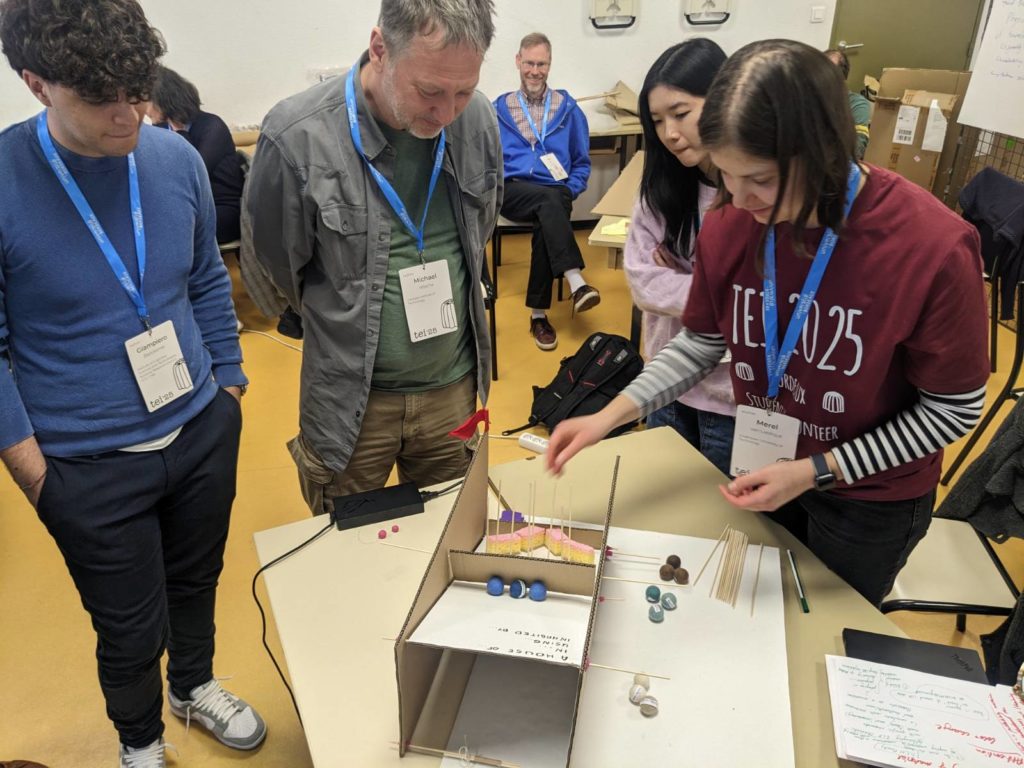

For example, the “LLM poetry pinball machine” prototype addressed the tendency to treat LLMs as replacement search engines rather than as language generators. This can lead to over-reliance and unwarranted trust in the truthfulness of LLM-generated statements. To highlight the probabilistic nature of LLMs and present them not as “truth machines” but as “probability machines”, the prototype showed how the prediction of the next word, represented by the ball, is influenced by the set temperature, represented by the number and placement of the wooden pins. The fewer the pins, the lower the temperature and the more likely the middle ball is to reach the bottom first, which represents a more conservative word choice. The more pins, the higher the temperature and the more randomised the word selection. To demonstrate this, the group used a poetry-generation template created by the artist Alison Knowles in association with James Tenney in 1967 (known as *The House of Dust*), which was written in the early programming language Fortran. Knowles suggested that a computer could generate poetry using a specific formula:

a house of (list material)

in (list location)

using (list light source)

inhabited by (list inhabitants)

The group used this formula as the running example in its LLM poetry pinball machine.
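The pinball metaphor corresponds to a small computation. Below is a minimal Python sketch, assuming the common textbook formulation of temperature sampling (scores, or logits, are divided by the temperature before a softmax turns them into probabilities), that fills the Knowles template one slot at a time. The candidate words and their scores are hypothetical, invented for illustration: they are not Knowles's original word lists, and a real LLM scores its entire vocabulary at every step rather than a handful of options.

```python
import math
import random

# Hypothetical candidates and scores (logits) for each slot of the template.
# Invented for illustration; a real LLM scores its whole vocabulary.
TEMPLATE = [
    ("a house of", {"stone": 2.0, "glass": 1.5, "dust": 0.5, "leaves": 0.2}),
    ("in", {"a forest": 1.8, "a city": 1.6, "the desert": 0.4, "a cave": 0.1}),
    ("using", {"candles": 1.7, "electricity": 1.5, "sunlight": 1.0, "lanterns": 0.3}),
    ("inhabited by", {"friends": 1.9, "fishermen": 0.9, "birds": 0.6, "strangers": 0.2}),
]


def softmax_with_temperature(logits, temperature):
    """Convert scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_word(candidates, temperature, rng):
    """Draw one word at random, weighted by its temperature-scaled probability."""
    words = list(candidates)
    probs = softmax_with_temperature([candidates[w] for w in words], temperature)
    return rng.choices(words, weights=probs, k=1)[0]


def generate_stanza(temperature, seed=None):
    """Fill each template slot in order; a fixed seed makes the output repeatable."""
    rng = random.Random(seed)
    return "\n".join(
        f"{prefix} {sample_word(candidates, temperature, rng)}"
        for prefix, candidates in TEMPLATE
    )


if __name__ == "__main__":
    print(generate_stanza(0.2, seed=1))  # low temperature: conservative choices
    print()
    print(generate_stanza(2.0, seed=1))  # high temperature: more varied choices
```

A low temperature such as `0.2` concentrates probability on the highest-scoring word in each slot, like the ball dropping straight down the middle of a board with few pins; a high temperature such as `2.0` flattens the distribution, so lower-scoring words are chosen more often and the stanzas vary more from run to run.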

Other prototypes highlighted yet other dimensions of LLMs and their tangibility. For instance, one prototype focussed on giving users more agency in their interaction with LLMs via a hand controller that would pulse or squeeze the user’s hand when the LLM is uncertain about which word to pick next. By squeezing tighter or loosening their grip on the bracelet-like controller, the user would then nudge the LLM towards either more conservative or more random word selection. Another prototype from the same group focussed on spatialising the latent space and exploring embeddings collaboratively, letting users pick out words in VR with a stick-like pointer and jointly explore how the words are linked.

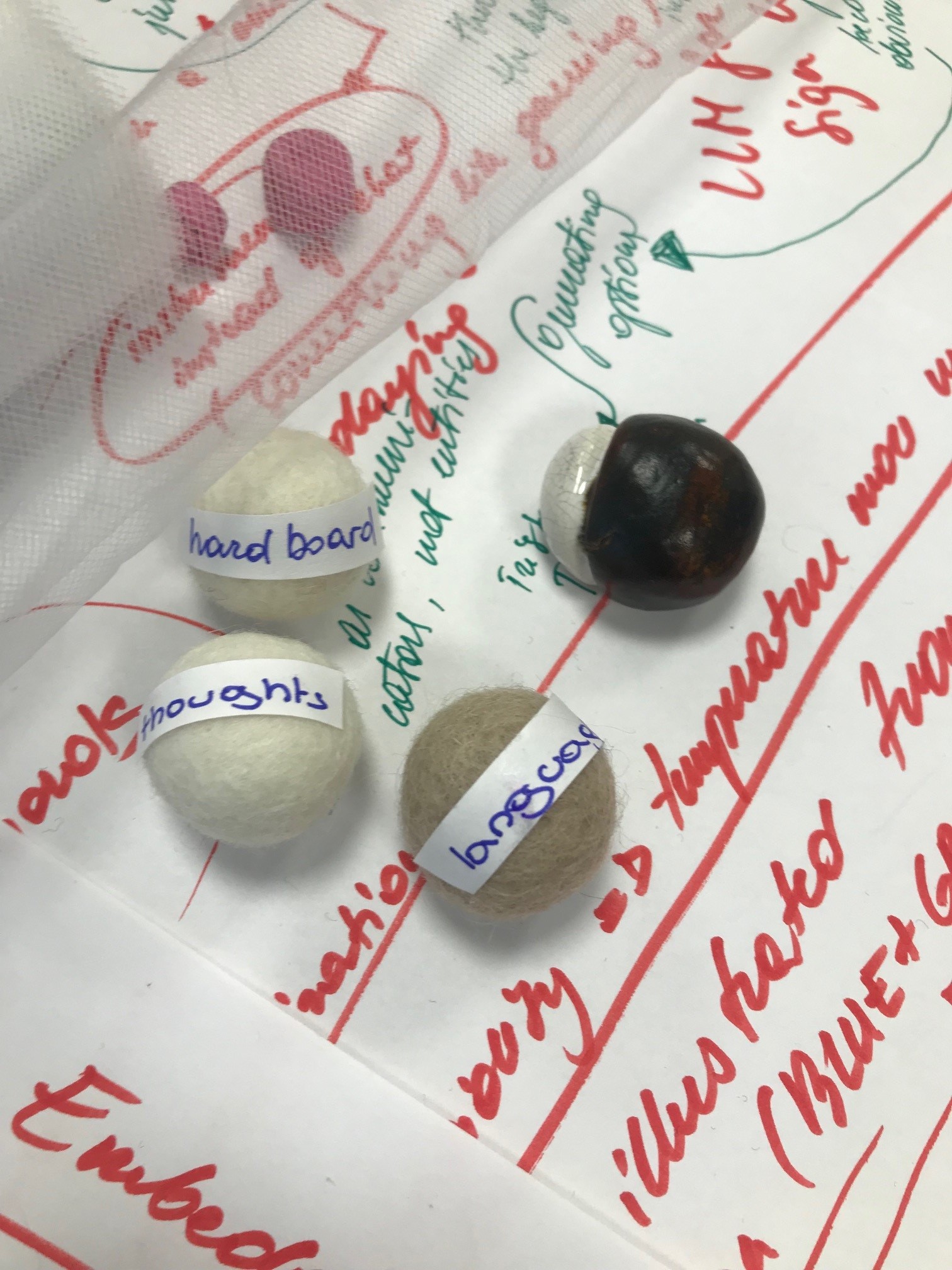

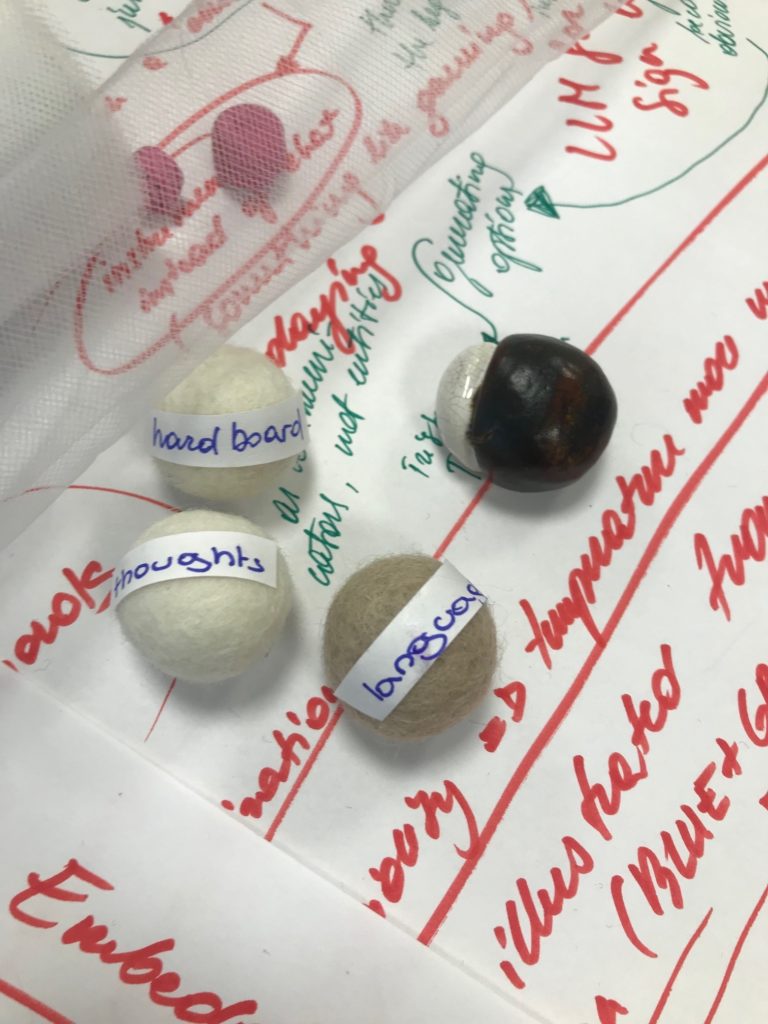

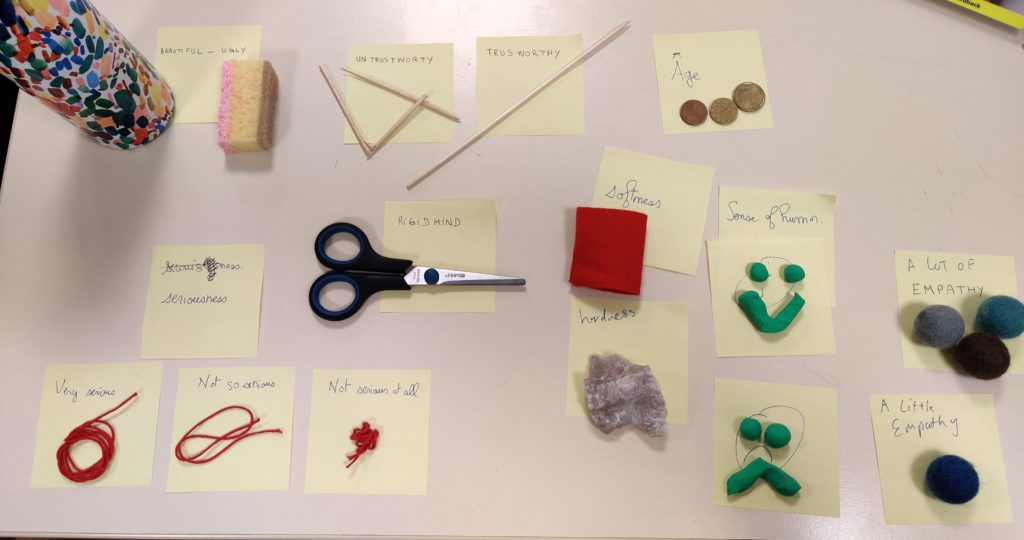

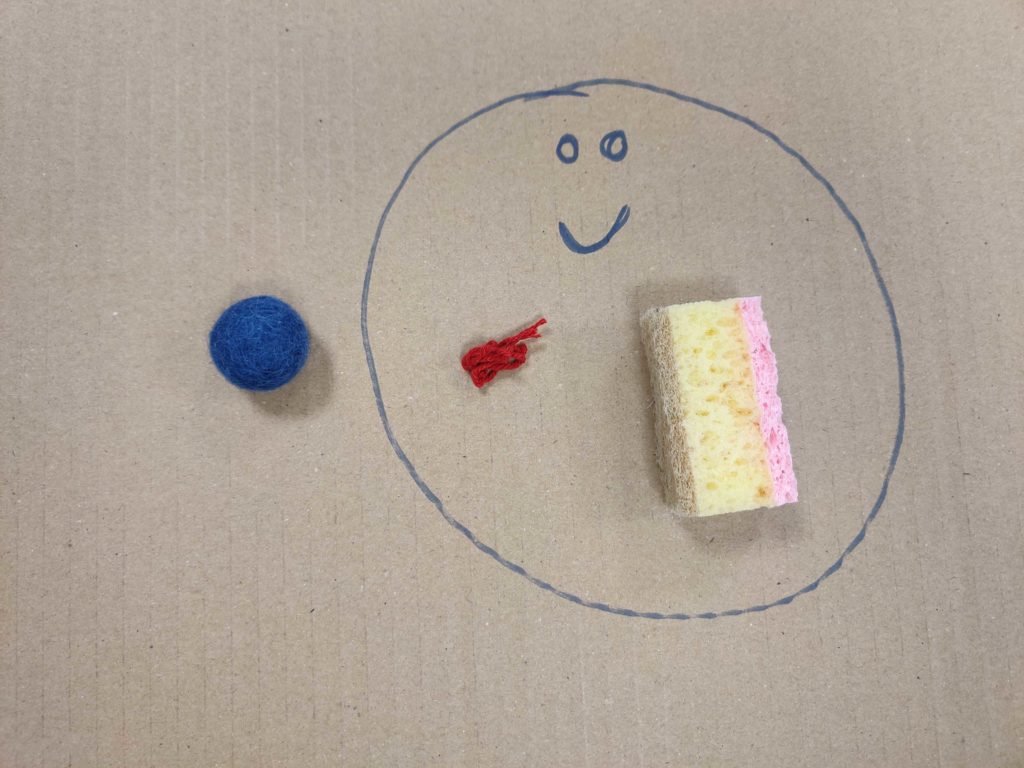

The last prototype, called “LLM body”, focused on giving an LLM a body and exploring how this body might evoke different emotions or affective qualities and thereby shape the setting of interaction. The idea was to map materials to an affective vocabulary and, by assembling an LLM body from these materials, change the LLM’s character and how it interacts with the user. For instance, one of the prompts used to give the LLM a specific body and character read as follows: “Create an image of a whimsical, abstract creature composed of: a colorful, patterned water bottle as the core for vibrance; a piece of rock for resilience; a green clay smiley face for humor; blue felt balls for a touch of empathy; red thread signifying balanced seriousness; broken sticks symbolizing unpredictability; and a coin representing wisdom through age. The setting is a playful, imaginative world that reflects a blend of structure and spontaneity.”

Overall, this was a first attempt to explore how tangible and embodied interactions can make LLMs more understandable, and as such it was quite successful. We developed several prototypes, exchanged ideas and, just as importantly, learned more about LLMs. This is perhaps the main takeaway, at least for our PIT team: understanding is not only something one acquires by reading or observing explanations. Explanation and, perhaps more profoundly, understanding also emerge from building physical representations of LLMs and their operations, or even of just some of their parts. Much as Goda Klumbytė, Daniela K. Rosner and Mika Satomi found during the Felt AI project, understanding and knowing can, and often do, emerge in interaction and engagement.

We hope to have the chance to continue this collaboration with our colleagues from France and Switzerland, particularly to explore specific dimensions of LLMs and how they can be tangibly interacted with or expressed, as well as the moments where tangibility and embodiment call attention to ethical concerns in LLM design and use. Thus: to be continued!