This is part two of a blog post series reflecting on a workshop on machine learning and epistemic justice, held at the FAccT 2020 conference in Barcelona. If you are interested in the workshop concept and the theory behind it, read our first article here. This post reflects on the workshop method. Written by Danny Lämmerhirst, Aviva de Groot, Goda Klumybte, Phillip Lücking and Evelyn Wan.

How can computer scientists, data scientists, and scholars from the humanities and social sciences hear one another, and acknowledge and appreciate each other’s ways of reasoning about algorithms? If our goal is to strive for epistemic justice, that is, to improve our capacities for fair and inclusive knowledge making, what form could a workshop take in response?

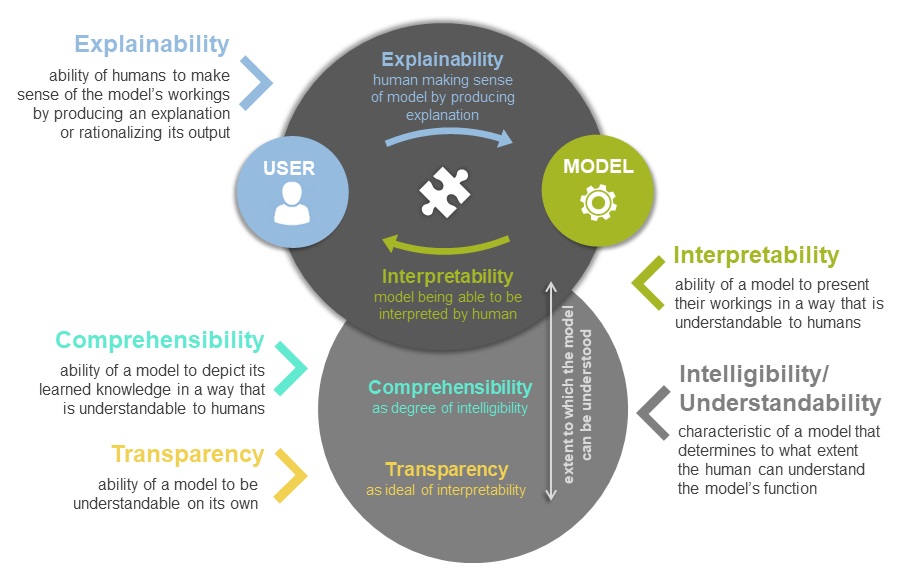

To address these questions, our FAccT (formerly known as FAT*) 2020 workshop revolved around two main parts, “Charting methodological workflows” and “Translation Cartographies”. At the start, we formed four groups of participants with diverse disciplinary backgrounds. Each group was introduced to facilitator guidelines on respectful participation and diversity of voices. The guidelines were meant to promote awareness, since these aims easily (and unintentionally) suffer from group dynamics, and knowledge production suffers as a consequence. We also offered a shared glossary: brief descriptions of key concepts that acted as a common reference point, to which people were encouraged to add their own takes (and lemmas). In this post we discuss some insights that came up during the first part, charting the workflows.

We started off with participants presenting and discussing their discipline’s usual workflows among themselves. By “workflow” we mean a formalized way of building, approaching or analyzing a particular object (the subject of investigation). The term itself is more frequently associated with industrial and organisational fields, and scholars from the humanities and social sciences often would not think of the way they do their work as adhering to a “workflow”. Nonetheless, a method, a specific approach, or a research routine can be regarded as a workflow. In computing, for example, design methods and practices of writing, editing, filing and distributing software products can be seen as workflows, too. We deliberately played with this concept to highlight that all of us, regardless of discipline, have “formats” that we adhere to in our work. Especially where these are tacit, we need to bring them to the fore in order to open ourselves up to reflection and critique.

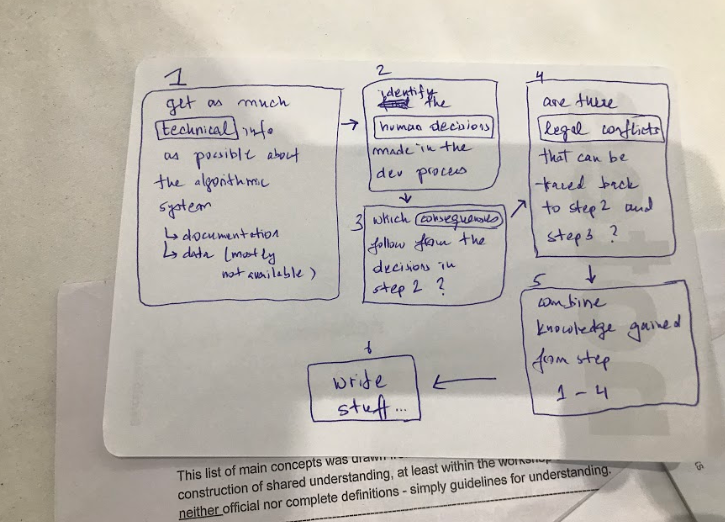

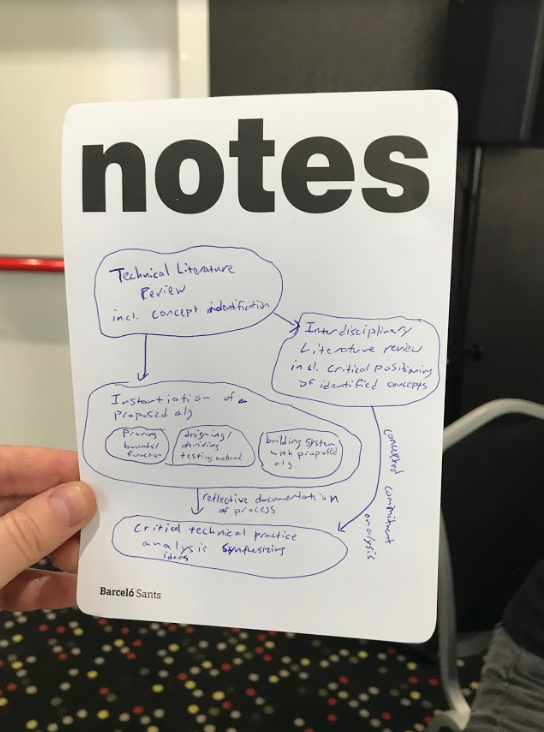

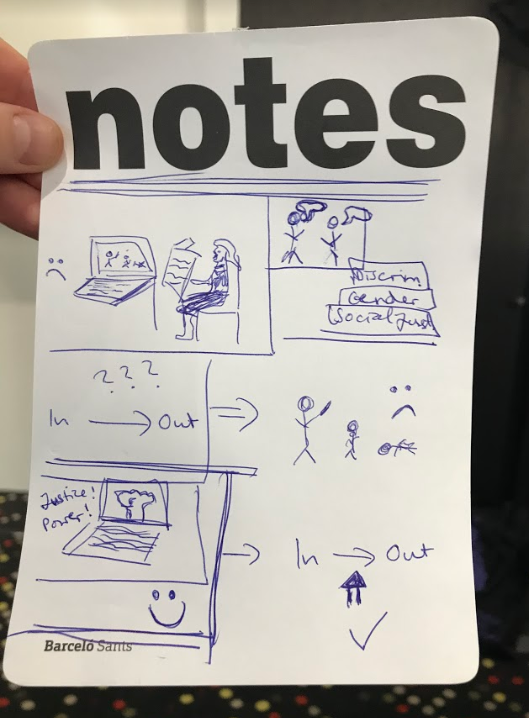

Above: Some sample workflows from different disciplines

For us, this was a way to invite everyone to summarise their discipline’s workflow in any representation they liked (a graphic, a comic, a text) and to share it with each other as an alternative “self-intro”. It was essential for building common ground in such a short time: understanding the way others work, and the kinds of questions different people zoom in on or bring to attention. It offered the possibility to connect with one another, despite everyone having very different jobs and output formats in their lines of work. For example, one participant working in policy observed first-hand that iterative cycles also take place in coding. The question then became how to build upon these first conversations and proceed from there.

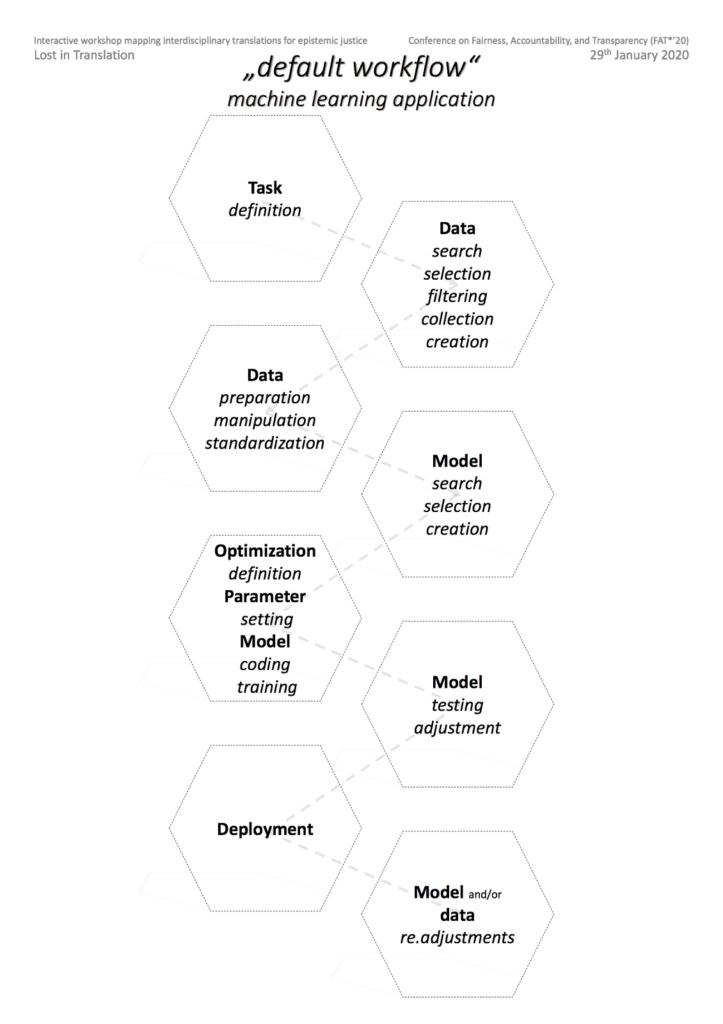

After the groups shared their workflows, we presented a “default” technical workflow for building an algorithmic system. This served as a blueprint and a basis for debating how we could design workflows otherwise. In other words, the second part of the exercise was to debate how this “prototypical” technical workflow could be changed towards ideals of epistemic justice. Drawing on their own experience with algorithmic systems and on their own discipline-specific perspectives, the participants in the various groups annotated, drew on and redrew the technical workflow in order to make it more interdisciplinary and more oriented towards epistemic justice (we will share examples and more details of the resulting workflows in the next post).

A shared knowledge space requires shared objects on which ideas and assumptions can be worked out and against which they can be tested: concrete examples, real-world applications, case studies. For our workshop, we picked the prototypical machine learning design workflow as our shared object, which the different groups tackled in various ways. As predicted, the workflow was quickly understood as being far too simplistic, but the responses differed: some groups rejected it altogether, for example, while others had difficulty deviating from it. How can the rich and messy realities of people’s different practices be represented on paper? We were interested in the kinds of examples and personal experiences that would inform the changes participants made to the standard workflow before them, and asked them to pay particular attention to instances of ‘conflict’ or difficulty.

Some chose to abandon the presented model immediately, using it merely as a springboard for discussion. Some noticed how quickly they moved from modelling the workflow to “modelling the world”, and how trying to capture all possible dynamics would render the model humongous and useless. In one group, participants decided to use a case study to test their ideas. Immediately, the case changed the model, showing how powerful a clear case can be for influencing algorithmic design and for establishing common ground. Perhaps a case of scientifically produced knowledge that was later exposed as bad science could drive such a discussion. Critical theory is rife with examples, and a workshop setting could try to tease out to what extent ‘workflows’ (in our broad understanding) were at play in allowing wrongful authority to be established. These interactions bring out the broader questions our workshop attempted to tackle: to what extent can one plan epistemically just collaboration from an abstract workflow? Are general models of working together even useful, or should such models always be case-specific? Can interdisciplinarity be formalized into a method, or should it adhere, in more flexible ways, to predispositions and normative concepts such as epistemic responsibility and justice?

Technical sciences tend to be familiar with abstract workflow models, and management studies offer multiple flowcharts detailing effective process management. Methods-turned-adjectives-turned-models such as “agile”, “lean” and others offer ways to steer work processes without burdening them with too rigid a structure. However, one of the biggest advantages of interdisciplinary work could be precisely its non-smoothness: the necessary pauses in the process in order to explain concepts, to address concerns and to find collaborative forms of research. Such work calls for sensibilities that attend to the epistemic, social and political power dynamics that are inevitably alive in knowledge making. Further, the value that disciplines within the social sciences and the humanities have to offer comes not from formalized work processes but from their interpretative power and their ability to examine context, history, background and positionality, all of which are case-specific.

Rather than nailing down how interdisciplinary collaboration is best organised, or how to deal with, e.g., formalization versus interpretative flexibility and case specificity, we intended to come up with insights that better inform, and help deal with, the ‘how to’ questions so many teams are struggling with. We start from the assumption that some middle ground is needed to discuss these questions productively, and we aim to come up with tactics to ‘prep’ the deliberative space for that discussion. Hybrid spaces could be constructed where all “sides” of the sciences come forth with the best they have to offer. Would such hybrid workflows flow? This is the experiment that is still to be done.
