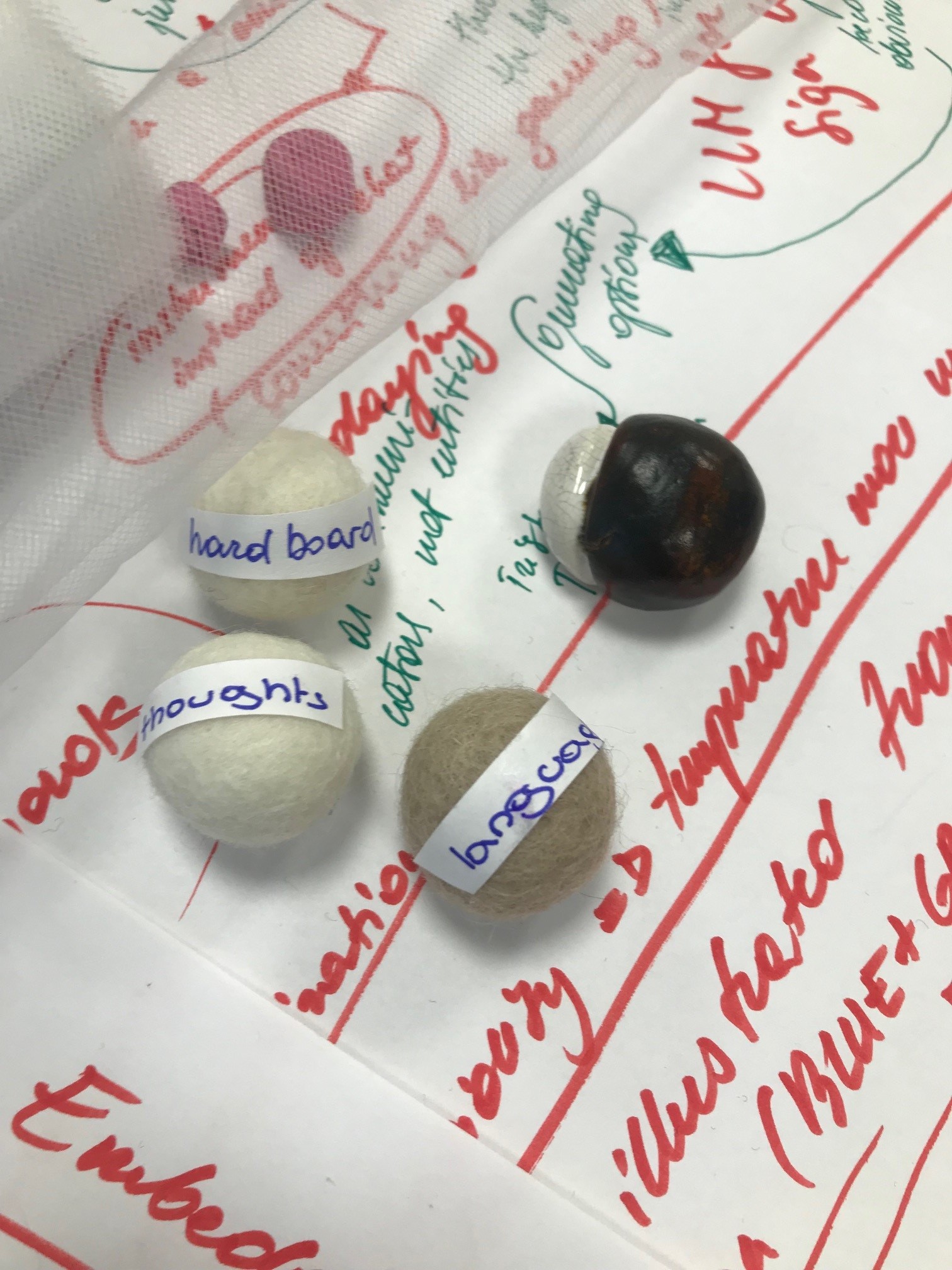

Tangible and embodied interactions – can they offer something for the way users understand and engage with large language models (LLMs)? This is the question that we explored in a studio (a.k.a. a hands-on workshop) titled “Tangible LLMs: Tangible Sense-Making for Trustworthy Large Language Models” that PIT, namely […]

Tag: transparency

After Explainability: Directions for Rethinking Current Debates on Ethics, Explainability, and Transparency

The EU guidelines on trustworthy AI posit that one of the key aspects of creating AI systems is accountability, the routes to which lead through, among other things, explainability and transparency of AI systems. While working on the AI Forensics project, which positions accountability as a matter […]

Messy Concepts: How to Navigate the Field of XAI?

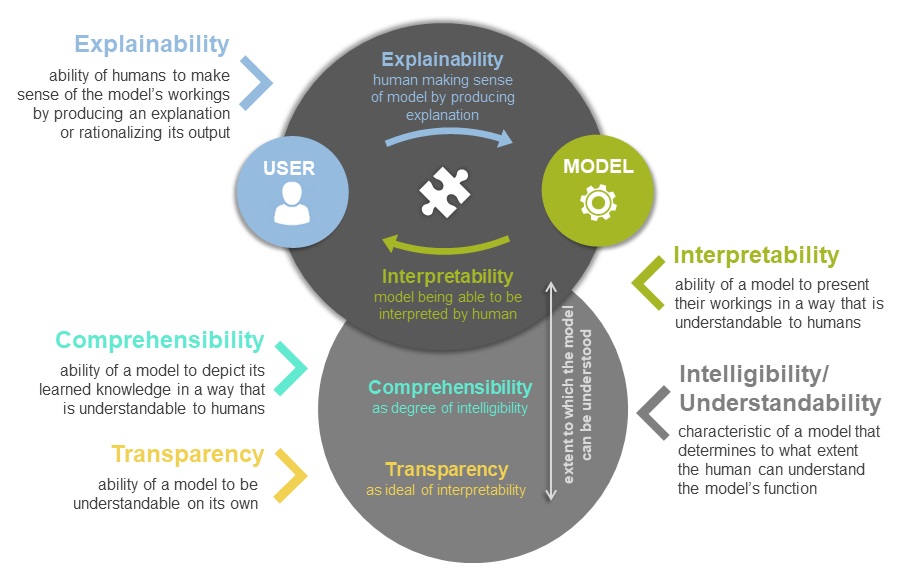

Entering the field of explainable artificial intelligence (XAI) entails encountering different terms that the field is based on. Numerous concepts are mentioned in articles, talks and conferences and it is crucial for researchers to familiarize themselves with them. To mention some, there’s explainability, interpretability, understandability, […]

Epistemic justice # under (co)construction #

This is the final part of a blogpost series reflecting on a workshop, held at the FAccT conference 2020 in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it as well as what is a […]

Experimenting with flows of work: how to create modes of working towards epistemic justice?

This is part three of a blog post series reflecting on a workshop, held at the FAccT conference 2020 in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it as well as what is a […]

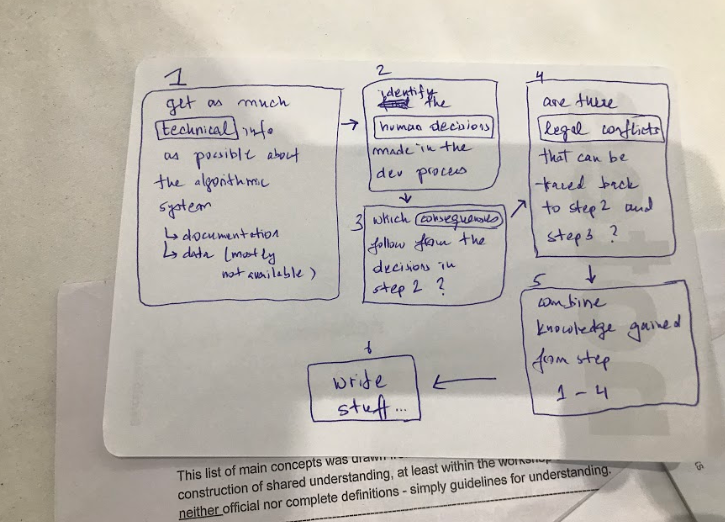

Finding common ground: charting workflows

This is part two of a blogpost series reflecting on a workshop, held at the FAccT conference 2020 in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it, read our first article here. This post […]

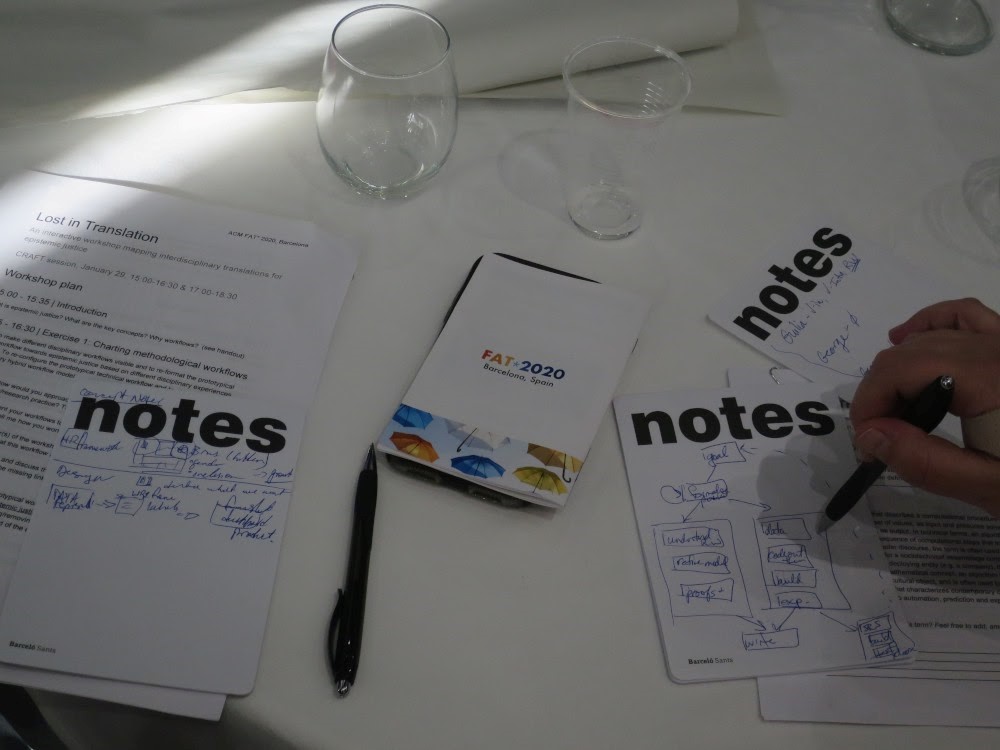

“Where is the difficulty in that?” On planning responsible interdisciplinary collaboration

By Aviva de Groot, Danny Lämmerhirt, Phillip Lücking, Goda Klumbyte, Evelyn Wan. This is the first in a series of blog posts on experiences gathered during the planning, execution and reflection of our workshop “Lost in Translation: An Interactive Workshop Mapping Interdisciplinary Translations for Epistemic […]