This is part three of a blog post series reflecting on a workshop, held at the FAccT 2020 conference in Barcelona, about machine learning and epistemic justice. If you are interested in the workshop concept and the theory behind it, as well as what a workflow is and why we worked with workflows, read our first two posts here and here.

In this post, work group facilitators briefly report on some of what came out of the Translation cartographies part of the workshop. This part focused on identifying and mapping terms that were important for facilitating cross-disciplinary understanding, and we introduce here some of the workflows that participants generated. How did participants go about re-writing the “default” technical workflow? What new modes of working were proposed? What conditions (practical, conceptual, contextual) were necessary to enable the implementation of the workflow proposed by the group? Where were the frictions (contested terms, methods, understandings) that created disagreement or discontinuities in the discussion? Spoiler alert: the exercise of redrawing a technical workflow, and identifying the terms and conditions needed for the new workflows to work, proved to be an ambitious feat to complete within our allocated time. We knew that beforehand; the question was whether participants would experience it as problematic. The jury’s still out, but we’ve already decided to build in at least some more breathing space in the next edition.

Outside ethics: cooperating around the AI black-box

The participants at this table remarked on the flatness and generality of the standard workflow. From the beginning they wondered to what extent they could steer away from the workflow, testing different maneuvers to work with it. Should people position themselves somewhere “in the workflow”? It seemed that people’s disciplinary backgrounds shaped how they positioned themselves. A computer scientist at the table responded that he would locate himself “in the optimization part”. A sociologist noted that she would consider the organisational embeddedness of AI and how it responds to broader societal and policy problems.

As most people could imagine interventions during the design and use of machine learning algorithms, they attached sticky notes at the beginning and the end of the workflow. These included “feedback loops for user testing”, “emphasis on labelling data and how labels emerge”, “internal ethics reviews”, and “establishing and using checklists of ethical requirements”, among others. Interestingly, one person suggested locating ethics outside of the workflow – a suggestion that was not contested but instead supported by proposals to establish “ethics offices”. These interventions – and the resulting workflow – reproduce the notion of AI as a black box where only input and output can be accounted for (and thus intervened upon).

Tough Love

From the get-go, this group of participants conducted their inquisitive exchanges in a cooperative, problem-solving spirit. Scheduling time to reflect (which we’d done, but minimally) seems especially valuable for ‘things’ to come out in such a setting. An early note from a participant read “how much would I have to explain to make this point .. what is my stake in ending up with an outcome that I am behind?”

Eventually, no term was left unturned before it was added to their workflow redesign, in what seemed a clear effort to uncover tacit disciplinary normativity and arrive at interdisciplinary trust. The participant choir’s smooth tuning of their social-political-legal voices in the stage of ‘problem formulation’ contrasted with their lengthy quarantining of the CS contribution of ‘solvability’ while they investigated it for negative performative (‘solutionist’) potential. Shared glossaries are loved for a reason (and ours did not include the term).

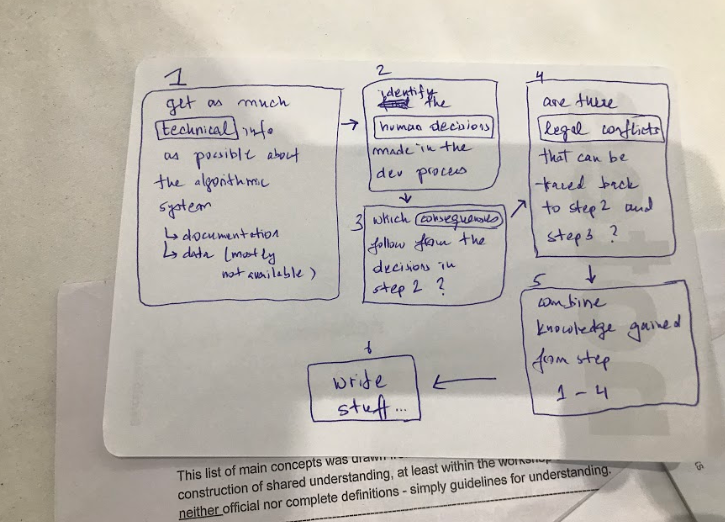

Around 18.30, a 5-step workflow was born under the name TAF ❤ (a.k.a. “tough love”), challenging the parent (venue) acronym. Although the stages were sorted progressively (from problem, design and ex ante impact assessments to briefing of deployment and advisory teams, and ex post impact assessment), strategic relations were drawn across boundaries and blue feedback loops attested to dynamic relations. The pre-final stage was headed by a big green post-it with a red exclamation mark and the text “the goal gets redesigned in every step.”

Making the work flow and adjust: building feedback loops

This text was written by the creators of the workflow as an annotation, and is presented here without edits:

Our model is a non-linear model that is more dynamic than a default linear workflow. Our workflow is inspired by feedback loops. The major loop is to go from model readjustment back to task definition. We have different levels on which interdisciplinary work is conducted. Workflows are divided into research and other processes. Connections are not given between the different levels but they can interact. Workflows can be interdependent and interacting. For instance, one level is to question and define task definitions and see how this task definition. An important aspect of our workflow(s) is humility. This means that different actors are aware of different actors. Awareness is an act of engagement, taking into account different perspective and that the own perspective is not king. Another important concept that might be related to humility is bias. As researchers we are aware of our biases but also carry biases and embed them into research. Bias may be a contested term that can be positive or negative in different contexts. The disciplinary upbringing is a form of bias that we should be aware of. Workflow(s) need actors and humanization which means we have different faces involved in the research process. Especially to realize epistemic justice, we need to take different communities into account.
